How Generative AI Powers Personalized Visual Content for Businesses

There was a time when having a single campaign photoshoot was enough. But the world changed: audiences fragmented, social media multiplied, and personalization became the foundation of modern marketing. Suddenly, one-size-fits-all visuals stopped working.

Today, a brand’s visual ecosystem has to stretch across dozens of formats, from high-converting Amazon product photos to emotionally rich Instagram stories, Pinterest mood content, and personalized ads that speak differently to each audience segment. The demand isn’t just for more content; it’s for smarter, tailored content that reflects what each customer cares about at that specific moment.

Generative AI technology bridges what once seemed like opposing forces: data and creativity. It allows teams to produce hundreds of visuals adapted to platforms, audiences, and campaigns, all while staying true to the brand’s story, on schedule, and on budget.

Instead of spending months planning and shooting every variation manually, brands can now ideate, test, and generate visuals faster than ever before. Let’s explore this shift.

The Data Behind Personalized Visuals

How Audience Data Shapes Visual Decisions

Personalized visual content doesn’t start with a photoshoot or AI prompt; it starts with data.

Every click, view, and scroll leaves behind small visual preferences: color choices that catch attention, camera angles that hold it, and moods that convert. Behavioral data, demographics, and engagement metrics together paint a picture of what each audience wants to see, and more importantly, what they associate with a brand they trust.

Generative AI helps decode these signals. It can process massive datasets like social interactions, search intent, and ad performance, and reveal which visual tones and compositions resonate with different groups.

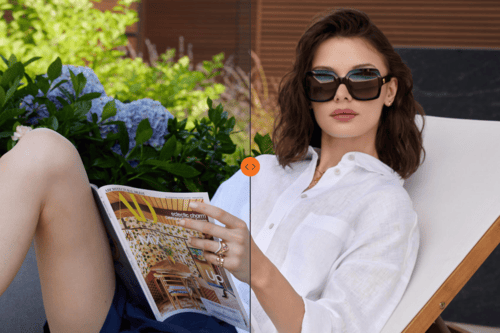

Take a skincare brand, for instance. AI might find that Gen Z it-girls, who live in a Y2K revival and value authenticity wrapped in playfulness, engage most with visuals that mix soft flash photography, slightly chaotic layouts, and nostalgic digital textures reminiscent of early 2000s magazines or disposable cameras.

Meanwhile, millennial professionals tend to prefer clean minimalism: sleek packaging shots, muted palettes, and editorial lighting that communicates reliability and premium care.

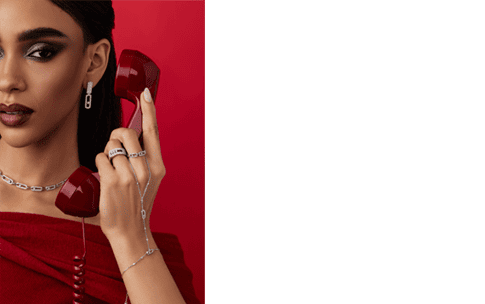

The same logic applies to a jewelry brand. Data might show that customers shopping for gifts respond best to storytelling, such as romantic setups, soft-focus scenes, and a human touch, while self-purchasing customers react more strongly to empowering, confident imagery that emphasizes detail and craftsmanship.

By translating these insights into a visual strategy, brands no longer have to guess what their audience might like; the data shows them.
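
As a rough illustration of how engagement data can point to a preferred visual style per audience segment, here is a minimal Python sketch. The segment names, style labels, and numbers are hypothetical and chosen only to mirror the skincare example above; a real pipeline would read from an analytics export rather than a hard-coded list.

```python
from collections import defaultdict

# Hypothetical engagement log: (audience_segment, visual_style, impressions, engagements).
# All names and numbers are illustrative assumptions, not real campaign data.
ENGAGEMENT_LOG = [
    ("gen_z", "y2k_flash", 1000, 90),
    ("gen_z", "clean_minimal", 1000, 40),
    ("millennial_pro", "y2k_flash", 800, 24),
    ("millennial_pro", "clean_minimal", 800, 64),
    ("gen_z", "y2k_flash", 500, 60),
]


def best_style_per_segment(log):
    """Return {segment: (style, engagement_rate)} for the top style in each segment."""
    # (segment, style) -> [total impressions, total engagements]
    totals = defaultdict(lambda: [0, 0])
    for segment, style, impressions, engagements in log:
        totals[(segment, style)][0] += impressions
        totals[(segment, style)][1] += engagements

    best = {}
    for (segment, style), (impressions, engagements) in totals.items():
        if impressions == 0:
            continue  # no exposure, so no rate to compare
        rate = engagements / impressions
        if segment not in best or rate > best[segment][1]:
            best[segment] = (style, rate)
    return best


if __name__ == "__main__":
    for segment, (style, rate) in best_style_per_segment(ENGAGEMENT_LOG).items():
        print(f"{segment}: {style} ({rate:.1%} engagement)")
```

A ranking like this is only a starting point for art direction: it says which style earned more engagement in the logged data, not why.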

Connecting Data with Creative Direction

The real power comes when this data meets creative intuition. AI doesn’t just collect numbers; it also helps interpret them visually. Creative teams can use these insights to build moodboards, instantly visualizing concepts that balance performance data with brand personality. Before a single shoot or generation begins, they can test multiple aesthetic directions and see which align with both metrics and message.

This data-backed process allows brands to be consistent and flexible. The brand DNA stays intact, but its visuals can shift fluidly across audiences, platforms, and seasons. It’s a visual system that evolves through feedback rather than guesswork, one that makes every campaign more precise than the last.

Generative AI as a Visual Production Powerhouse

In the past, scaling visual content meant scaling teams, budgets, and time. Each campaign required separate shoots, retouching, and production timelines, a process that simply couldn’t keep up with the pace of digital marketing. Brands found themselves stuck between two bad options: either invest heavily in large-scale production or settle for repetitive, generic imagery.

Generative AI visual technologies are changing this equation completely. We don’t mean basic AI platforms, but studio-grade technologies and frameworks built on AI. Lenflash is a pioneer in adapting generative AI to professional visual content creation. Our creative team produces localized, seasonal, and segmented visuals in a fraction of the time. What once took a month of coordination can now be ideated, tested, and produced within a week.

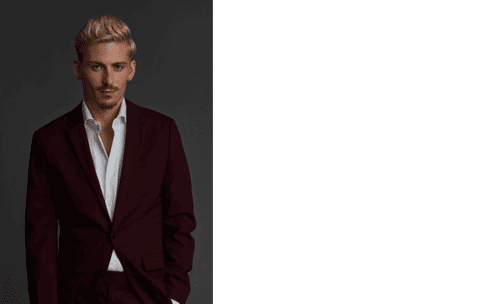

Imagine this: a fashion brand can generate multiple campaign directions, like a summer editorial for Europe, a minimalist capsule for Japan, and a bold streetwear story for the US, all aligned with the same brand DNA but visually tuned to each market’s aesthetic expectations. This ability to scale nuance is where AI-powered visual content creation stands out. Instead of diluting the brand, it lets every segment see the version of your brand that feels made for them.

And when it comes to performance marketing, Generative AI makes A/B testing and ad personalization finally manageable at scale. Brands can now afford to order dozens of “content variants” with subtle changes in framing, styling, or background to test what drives more clicks or conversions. Each test feeds back into the system, refining future visuals through data-driven iteration.
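
To make the testing step concrete, here is a minimal Python sketch of one common way to compare two content variants: a two-proportion z-test on click-through rates. This is an assumption about how such a comparison could be done, not a description of any specific ad platform’s method, and the function name and sample numbers are hypothetical.

```python
import math


def compare_variants(clicks_a, views_a, clicks_b, views_b):
    """Two-sided two-proportion z-test on click-through rates.

    Returns (rate_a, rate_b, z, p_value). A small p_value suggests the
    difference between the variants is unlikely to be random noise.
    """
    rate_a = clicks_a / views_a
    rate_b = clicks_b / views_b
    # Pooled rate under the assumption that both variants perform the same.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if std_err == 0:
        # Both variants have 0% or 100% clicks: no measurable difference.
        return rate_a, rate_b, 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value


if __name__ == "__main__":
    # Hypothetical numbers: variant B swaps a neutral background for a warm one.
    rate_a, rate_b, z, p = compare_variants(100, 1000, 150, 1000)
    print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  z = {z:.2f}  p = {p:.4f}")
```

When dozens of variants are tested at once, some will look like winners by chance, so a stricter threshold or a multiple-comparison correction is worth considering before acting on a single result.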

Adapting for Every Platform and Audience Segment

Every platform demands its own language. What works on Pinterest won’t work on Amazon. AI helps brands adapt their visuals seamlessly across this fragmented landscape.

A single AI-powered photoshoot can be transformed into ten different visual executions. Each version carries the same product accuracy and art direction, but the emotion and format shift for context. That’s what keeps brand visuals relevant without overwhelming creative teams.

Merging Human Art Direction with AI Speed

There’s still a common misconception that AI will replace humans in visual content creation. In reality, it replaces repetition, not experience and direction. Human-led art direction remains the center of quality and meaning, defining the story, tone, and emotion behind each image.

At Lenflash, this balance is core to how we work. Every project begins with real product photography captured in professional setups, whether on a mannequin or as a flat lay. From there, art directors define the visual story: who the image speaks to, what feeling it should evoke, and how it connects to brand DNA.

Then AI steps in: generating models, styling variations, and scenes around those authentic products. It brings speed and flexibility to what used to be weeks of production work. Retouching and refinement complete the process, ensuring every visual is still human-approved and brand-accurate.

That’s the real power of merging AI execution with creative precision: a workflow that’s faster, more cost-effective, and creatively limitless without losing the human touch that gives visuals their depth.

Data-Driven Personalization in Action

A fashion brand, for example, can test subtle variables such as background color, lighting style, or framing to see what resonates with its audience. The insights gathered from these micro-tests flow back into the creative system, informing future AI generations and even future photoshoots.

This visual data loop turns creative production into an evolving system. Rather than starting from zero with each campaign, every piece of content feeds the next one, refining aesthetic decisions, audience segmentation, and brand tone over time. What once required intuition alone now relies on measurable creative intelligence.

Now imagine if the system didn’t just react to what worked, but anticipated what would work next. That’s the idea behind predictive visual creation. With the help of AI, creative teams can better analyze campaign history and performance metrics to forecast which types of visuals are likely to perform well for a specific audience or platform.

As these systems mature, brands move toward self-improving creative ecosystems, where every new image generation or shoot becomes more strategic than the last. Over time, this creates a feedback-driven brand identity: one that evolves dynamically with audience behavior, rather than lagging behind it. Brands start creating what their customers actually want to see before customers even realize it themselves.

AI-Generated Content in Brand Ecosystems

One of the biggest challenges modern brands face is visual fragmentation. When you produce hundreds of assets per month for social media, marketplaces, ads, newsletters, and partner platforms, it’s easy for consistency to slip. Different teams, timelines, or contractors can unintentionally alter the tone, lighting, or storytelling, creating a patchwork of visuals that no longer feel unified under one brand identity.

At Lenflash, our approach connects directly with LF Cloud, our content management system, which allows for revision, storage, tagging, and organization of all assets. It gives marketing, design, and product teams the opportunity to work from the same source of truth.

This level of integration creates a brand ecosystem that’s both cohesive and agile, a structure where content doesn’t just multiply but stays connected to the same strategic vision.

Ethical and Authentic Use of AI Visuals

As AI-generated visuals become more common, authenticity has become a defining question. Customers are increasingly aware of digital creation, and they expect transparency and honesty from the brands they support.

The key is to use AI responsibly. Authentic AI content should always reflect the true product: its shape, material, reflection, and color. The goal is to extend the storytelling possibilities, not to distort reality.

That’s why we pair professional AI workflows with real product foundations. At Lenflash, AI-generated visuals are always built around authentic photography with real textures, accurate proportions, and verified color references. From there, AI extends the environment, adds storytelling layers, or visualizes lifestyle context while maintaining product truth.

This balance ensures AI supports creativity without breaking trust. In the long term, ethical use of AI becomes part of brand credibility.

Creative Intelligence as the Next Level of Commercial Visuals

The next evolution of visual marketing is not about automation alone, but about creative intelligence. This concept describes the fusion of three layers that once operated separately: brand strategy, creative direction, and AI-powered workflow. Together, they create a system where visuals are beautiful, efficient, and strategically alive.

In practice, creative intelligence means your visual ecosystem continuously learns, reacts, and refines. Brand strategy defines the emotional and commercial goals: what your visuals should communicate and to whom. Creative direction translates those goals into storytelling, tone, and design. Then AI helps the visual team to take that creative logic and apply it across every channel, generating, testing, and optimizing visuals at scale.

It’s a cycle of insight and expression. When an ad variation performs better in one market, the system helps marketers to identify why: maybe it’s color psychology, local fashion cues, or composition trends. That insight feeds back into the next creative decision, informing art direction for the next batch of visuals. Over time, the brand develops a living visual intelligence: a style that feels human but functions like data.

For businesses, this marks a profound shift. Visuals become a responsive part of business strategy. Creative intelligence helps brands stay visually relevant in real time, balancing consistency with adaptability, storytelling with precision, and emotion with measurable return on investment.

If you want to explore how this approach can elevate your content, from personalized campaign visuals to AI-powered on-model catalogs, discover Lenflash AI Visual Content Services, where human-led art direction, visual experience, and technical skill meet AI capabilities to produce high-performing, brand-true visuals at scale.

FAQ: Generative AI and Personalized Visual Content

How does Generative AI help brands create personalized content faster?

Generative AI automates many stages of visual production, drastically reducing the time needed to produce campaign visuals. It allows brands to instantly generate multiple versions of the same concept, adapt it to various audience segments, and deploy platform-specific visuals without repeating the entire production cycle. What once required full reshoots can now be achieved through smart AI variation, guided by human direction.

What data could be used to customize AI-generated visuals?

AI uses anonymized, aggregated data such as audience demographics, behavioral trends, engagement metrics, and search intent. This data helps determine which tones, compositions, and visual styles resonate with different audience groups. For instance, AI can detect that a particular editing style performs better among Gen Z audiences or that warmer backgrounds drive more conversions for gifting campaigns.

Can AI-generated visuals look authentic and on-brand?

Yes, when created under proper art direction and an AI-powered workflow. At Lenflash, for example, every AI-based visual starts from genuine photography and maintains full alignment with the brand’s tone and visual identity, ensuring that the result is commercially effective.

How do AI visuals improve A/B testing results?

Instead of testing just copy or CTA differences, brands can experiment with background color, lighting style, or framing. Because AI can generate these variations rapidly, brands can collect performance data faster and refine visuals based on what truly resonates, leading to higher conversion rates over time.

How can businesses start implementing AI in visual production?

Partnering with a studio experienced in AI-driven production, like Lenflash, helps ensure that creative direction, data, and technology work together seamlessly.